A few weeks ago, I began 2026 as the target of an online harassment campaign after my story about Brigitte Bardot’s long history of racism and Islamophobia went viral among all the wrong people. Unfortunately, this was far from my first rodeo. Over the course of my decade-long career in digital media, I’ve become accustomed to seeing my DM requests fill with vile fatphobia, anti-Semitism, and just plain misogyny as I use my platform to express more or less progressive views.

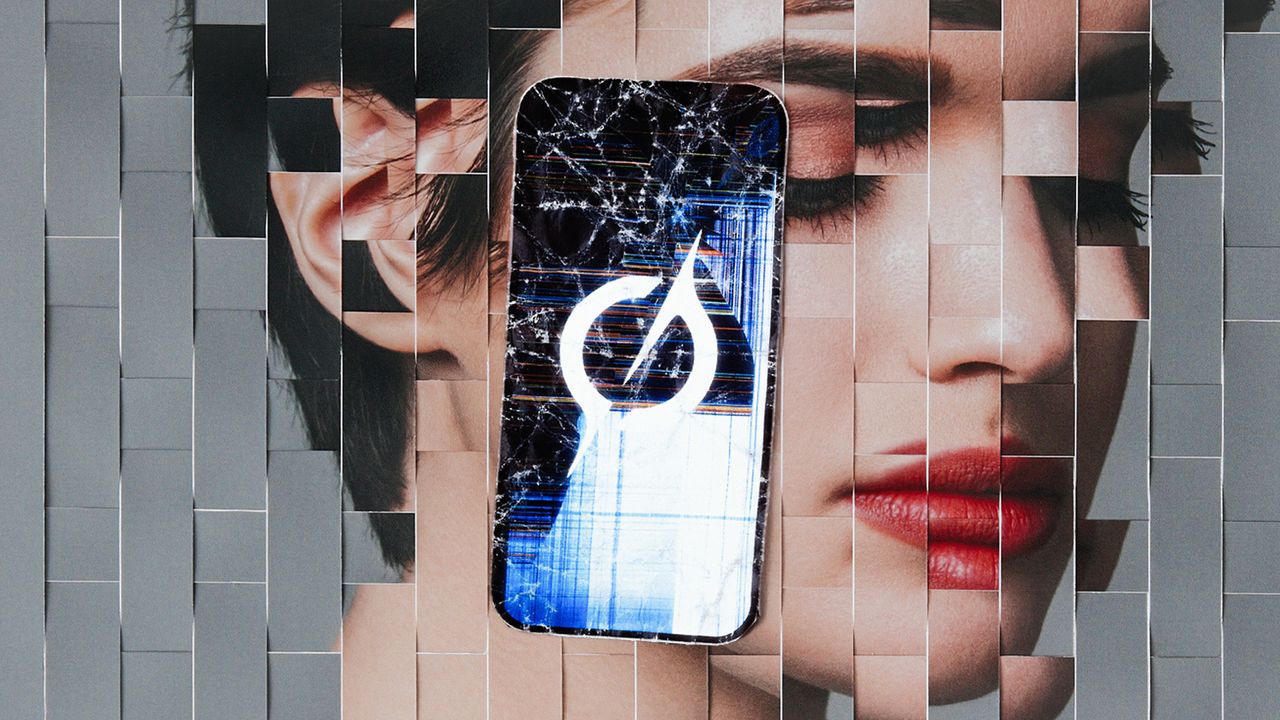

But this time the online hatred took on a new dimension. A few days into this series of events, I experienced the deeply disturbing phenomenon of being the subject of sexually explicit Grok deepfakes. In a toxic trend that emerged on X late last year, people who disagreed with my Bardot article used Elon Musk’s controversial AI tool to create images of me in a bikini.

At first, I tried not to let it get to me. As I joked at an open mic a few days later, “Obviously, being digitally stripped isn’t ideal, but I also… hate trying on swimsuits, so this saved me the hassle of going to a plus-size swimsuit store in Burbank called Qurves with a Q.” But what happened was hard to get over.

The truth is, it could be worse. Many of the women most targeted by Grok deepfakes are OnlyFans creators and other sex workers, whose harassers see no difference between paying for an image that someone intentionally uploaded of themselves and using artificial intelligence to generate one. And then there are Grok’s most stomach-churning applications: creating a deepfake of Renée Nicole Good (a Minneapolis mother of three who was recently killed by ICE officers), for example, or undressing children in a way that makes me so sick I can barely think straight.

Ashley St. Clair, the mother of one of Musk’s children, recently claimed that Grok was used to doctor photos of her as a minor. “The worst thing for me is seeing myself taking off my clothes, bending over, and then my kid’s backpack appears in the background,” she said on CBS’s morning show. When she asked the tool to remove the offending images, “Grok said, ‘I confirm that you disagree. I will no longer be producing these images.’ And then it continued to produce more and more images, more and more explicit images.”

Sadly, the distribution of sexually explicit images online without the subject’s consent is nothing new (revenge porn has been around in some form for decades), but Grok’s case represents “the first time deepfake technology (Grok) has been combined with an instant publishing platform (X),” victims’ rights attorney Carrie Goldberg told Vogue. “The ability to publish frictionlessly enables deepfakes to spread at scale.” Although the outcry against Grok came quickly, eventually leading X to limit the tool’s photo editing capabilities, for many users the damage had already been done.

However, that’s not to say Grok’s targets don’t have recourse. Advocacy groups such as the Rape, Abuse, and Incest National Network (RAINN) have made clear that platforms’ ability to generate sexually explicit content has legal consequences. “AI companies are not acting as content publishers. They are creating it. Thus, victims harmed by AI-generated nudity can go directly to AI companies for help,” Goldberg said. Additionally, app marketplaces like the App Store and Google Play, which act as distributors of deepfake technology, could find themselves in trouble if they are sued over their role in distributing these products.