A new study has found that the safety practices of some of the world's largest artificial intelligence companies "fall well below" global standards, with leading Chinese firms DeepSeek and Alibaba Cloud among the worst offenders.

A safety evaluation by an independent panel of experts found that while companies are racing to develop superintelligence, none has a robust strategy for controlling such advanced systems, according to a new edition of the Future of Life Institute's AI Safety Index released on Wednesday.

"All companies reviewed are racing toward AGI (artificial general intelligence)/superintelligence with no clear plan to control or align this smarter-than-human technology, leaving the most serious risks unaddressed," the report says.


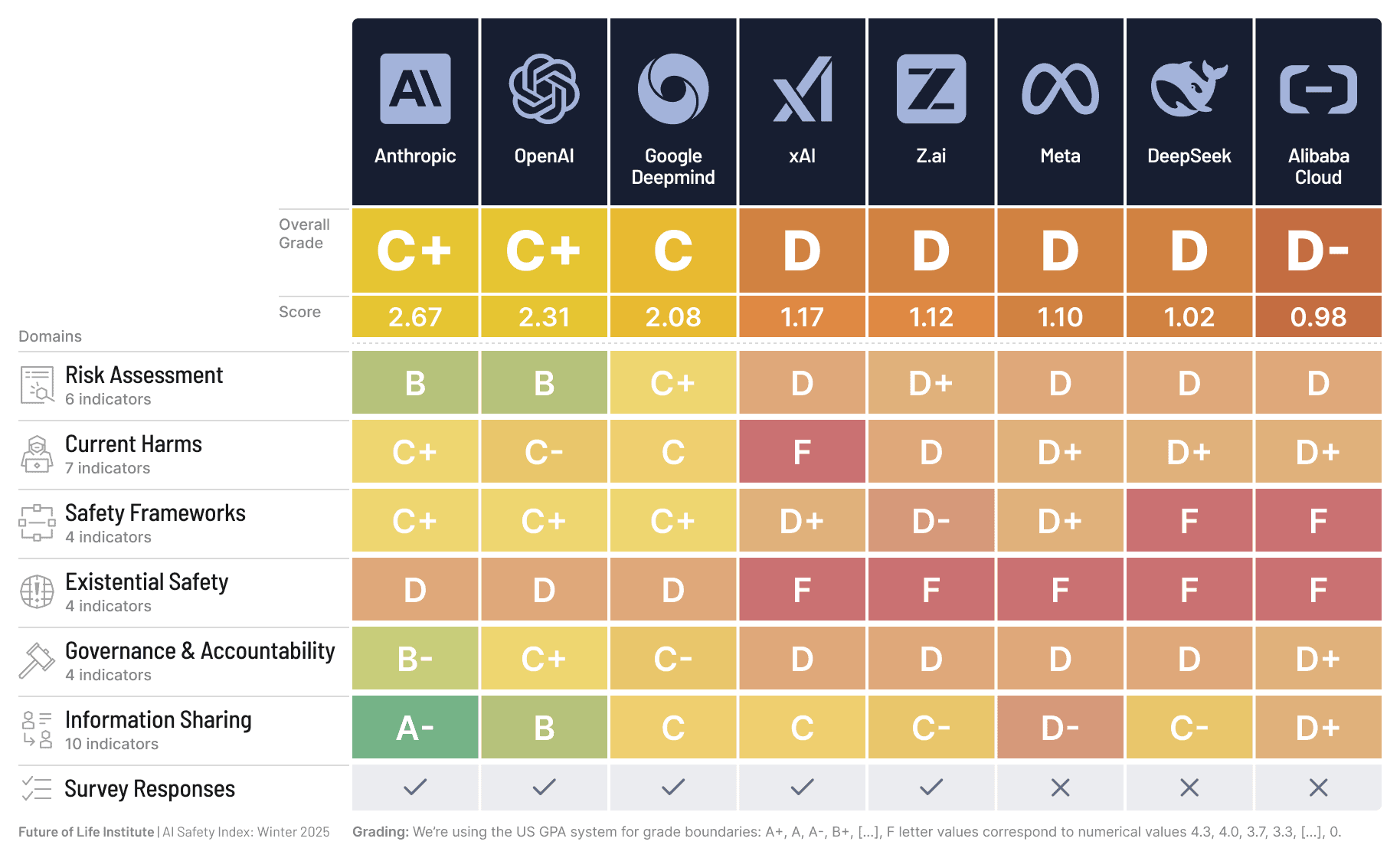

The report rates leading AI companies including Anthropic, OpenAI, Google DeepMind, xAI, Meta and Chinese majors Z.ai, DeepSeek and Alibaba Cloud, and assesses them on parameters such as risk assessment, current harms, safety framework, existential safety, governance and accountability, and information sharing.

No company received a grade higher than a "C," the report said. US firm Anthropic ranked highest, scoring slightly above peers OpenAI and Google DeepMind.

Meanwhile, all Chinese companies received a "D" grade, with Alibaba Cloud performing worst at D-.

DeepSeek and Alibaba Cloud both received failing grades on Safety Framework, a metric that assesses how companies respond to known risks and proactively look for other potential threats.

DeepSeek, Z.ai and Alibaba Cloud do not have public safety frameworks, the report said.

At the same time, every AI company reviewed ranked poorly on existential safety, a metric that "examines whether a company has published a comprehensive, concrete strategy for managing catastrophic risks."

AGI refers to a level of artificial intelligence with human-like abilities to understand or learn any intellectual task that a human can. Such AI does not exist yet, but companies like OpenAI say it could arrive by the end of the decade.

"Existential safety remains the industry's core structural failure, making the widening gap between ambitions to accelerate AGI/superintelligence and the absence of credible control plans increasingly alarming," the report said.

"It is surprising that companies whose leaders predict AI could end humanity have no strategy to avoid such a fate," David Krueger, an assistant professor at the University of Montreal and a reviewer on the panel, said in a press release.

However, despite the poor grades, reviewers "noted and commended" several safety practices that Chinese companies have implemented under Beijing's regulations, the report said.

"Binding requirements for content labeling and incident reporting, along with voluntary national technical standards outlining structured AI risk management processes, give Chinese firms stronger baseline accountability on certain metrics than their Western counterparts," the report said.

It also noted that Z.ai, a unit of China's Zhipu, said it was working on a risk plan.

"AI is less regulated than restaurants"

A Google DeepMind spokesperson told Reuters the company would "continue to innovate on safety and governance at pace with capabilities" as its models become more advanced.

xAI responded to a Reuters query with "Legacy Media Lies," in what appeared to be an automated reply.

Anthropic, OpenAI, Meta, Z.ai, DeepSeek and Alibaba Cloud did not immediately respond to requests for comment on the study.

The report from the Future of Life Institute comes amid heightened public concern over the societal impact of smarter-than-human systems capable of reasoning and abstract thought, following several cases of suicide and self-harm linked to AI chatbots.

"Despite the recent uproar over AI-powered hacking and AI driving people to psychosis and self-harm, US AI companies remain less regulated than restaurants and continue to lobby against binding safety standards," said Max Tegmark, MIT professor and president of the Future of Life Institute.

The AI race also shows no signs of slowing, with major tech companies committing hundreds of billions of dollars to upgrade and expand their machine learning efforts.

In October, a group including scientists Geoffrey Hinton and Yoshua Bengio called for a ban on the development of superintelligent AI until the public demands it and science paves a safe way forward.

"AI CEOs claim they know how to build superhuman AI, yet none has been able to show how they will prevent us from losing control, after which humanity's survival is no longer in our hands. I'm looking for proof that they can reduce the annual risk of losing control to one in 100 million, as required for nuclear reactors. Instead, they admit the risk could be one in 10, one in 5, or even one in 3, and they can neither justify nor improve those numbers," Stuart Russell, a professor of computer science at the University of California, Berkeley, and a reviewer on the panel, said in a public statement.

"The current technological direction may never be able to support the necessary safety guarantees, in which case it will really be a dead end."

- Vishakha Saxena, Reuters