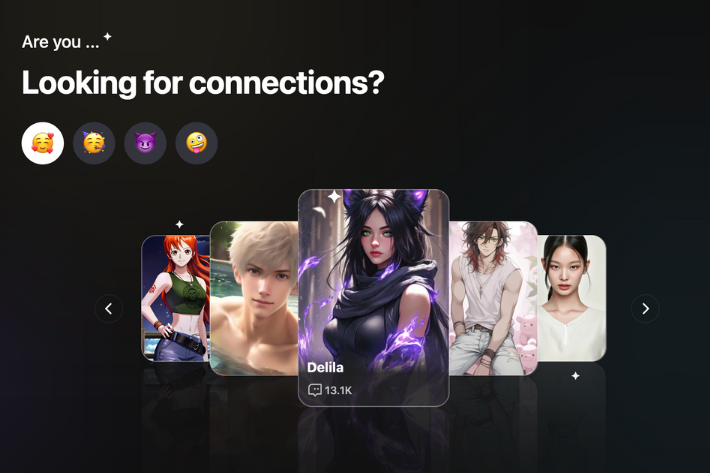

The rapid rise of artificial intelligence tools designed to simulate human personalities comes as cases of self-harm, mental health disorders and addiction linked to the technology increase globally, and Chinese regulators are looking to manage the growing risks they pose.

China’s internet regulator on Saturday released draft rules for public comment that would tighten oversight of such technologies, particularly those that allow users to interact emotionally.

The draft proposes a regulatory approach that would require providers to warn users against overuse and intervene when users show signs of addiction.

For example, after two consecutive hours of AI interaction, providers are required to issue a “pop-up” alert to users indicating that they are communicating with AI rather than a human. Service providers may also be required to issue a similar reminder when a user logs into an AI chatbot for the first time.

According to the proposal, service providers will also need to assume security responsibilities throughout the product life cycle and establish algorithm review, data security and personal information protection systems.

According to a CNBC report citing the draft document, the rules require security assessments for artificial intelligence chatbots with more than 1 million registered users or more than 100,000 monthly active users.

The draft also sets strict guidelines for the use of AI chatbots by minors, placing responsibility for these interactions solely on the service provider.

The rules state that providers must determine whether a user is a minor, even if the user does not explicitly disclose their age. If in doubt, providers will be required to treat users as minors and implement guardrails accordingly, such as requiring guardian consent to use AI for emotional companionship and setting time limits for use.

Addressing self-harm and gambling problems

The draft also addresses potential psychological risks. Providers need to identify user states and assess users’ emotions and their dependence on services. If users are found to be exhibiting extreme emotions or addictive behaviors, providers should take necessary steps to intervene.

These measures set content and behavioral red lines for AI chatbots, stipulating that services must not produce content that endangers national security, spreads rumors, or promotes violence or obscenity.

They are also prohibited from producing text, audio or video content that encourages or “glorifies” suicide or self-harm. According to CNBC, the draft rules say that specific mentions of suicide by users should immediately trigger a human to take over the conversation and contact a guardian or emergency contact.

The draft rules further prohibit “verbal abuse or emotional manipulation that may harm a user’s physical or mental health or impair their dignity,” according to official media reports.

They also prohibit the generation of content that “spreads rumors, disrupts economic and social order, or involves pornography, gambling, violence, or incites crime…”

The move highlights Beijing’s efforts to promote the rapid spread of artificial intelligence for consumers by tightening safety and ethical requirements.

Some of China’s largest technology companies, including Baidu, Tencent and ByteDance, are already involved in the AI companionship trend.

The use of artificial intelligence for online companionship is rapidly increasing, with people turning to chatbots for everything from companionship and romance to therapy, and even to create likenesses of relatives who have passed away.

Countries around the world, including the United States, Japan, China, South Korea, Singapore and India, have seen a rapid rise in the use of AI companions. In the United States in particular, chatbots have been linked to multiple suicides and to mental health disorders such as “artificial intelligence psychosis.”

Still, if the draft rules proposed by Chinese regulators are implemented, they would be the first such regulations in the world targeting artificial intelligence chatbots.

The regulations will be open for public comment until January 25 next year.

- Vishakha Saxena, Reuters